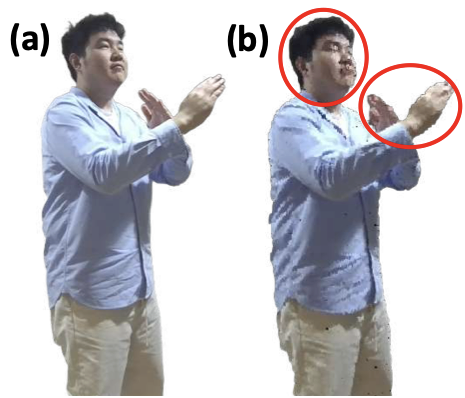

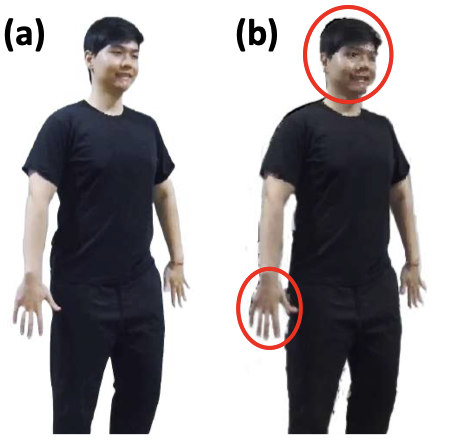

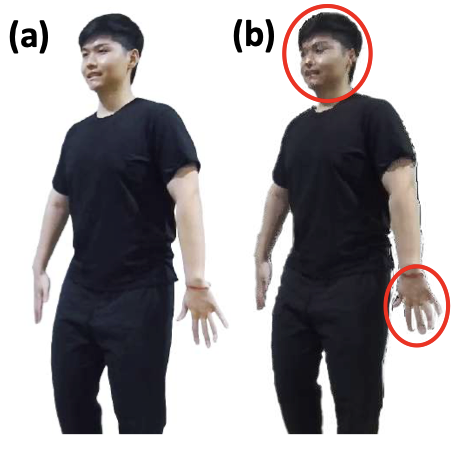

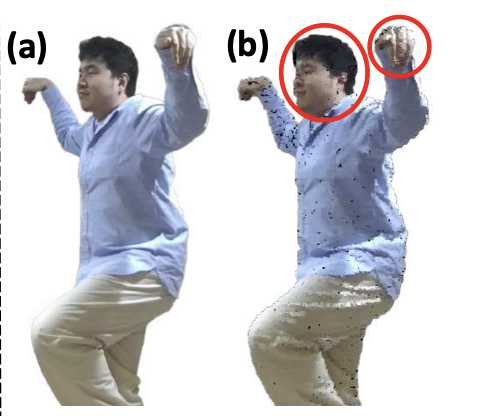

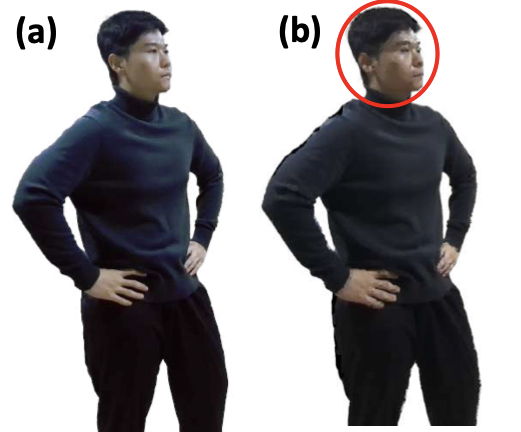

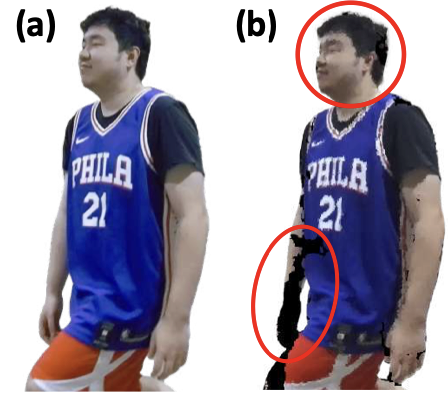

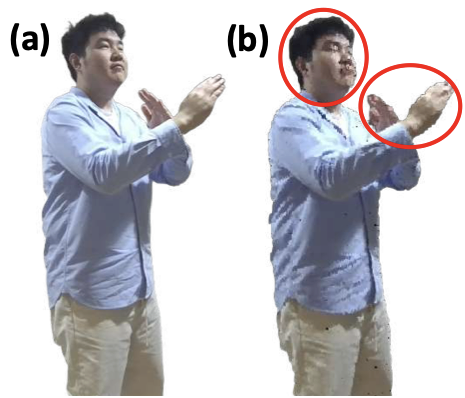

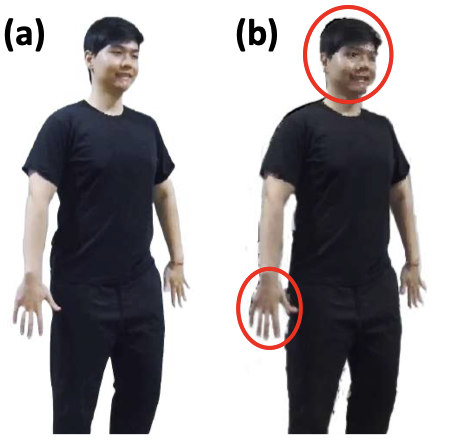

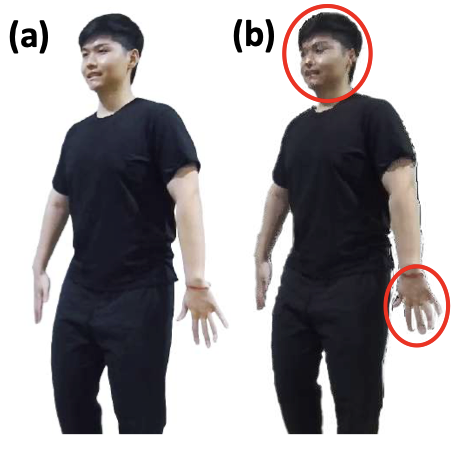

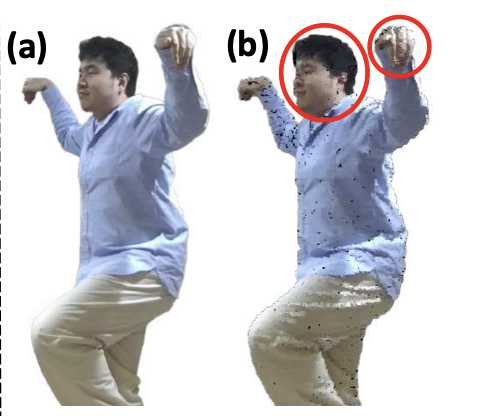

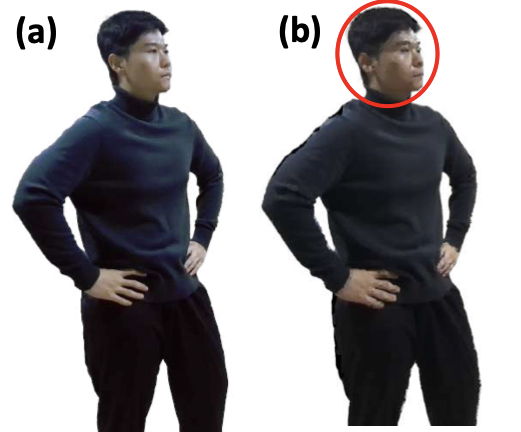

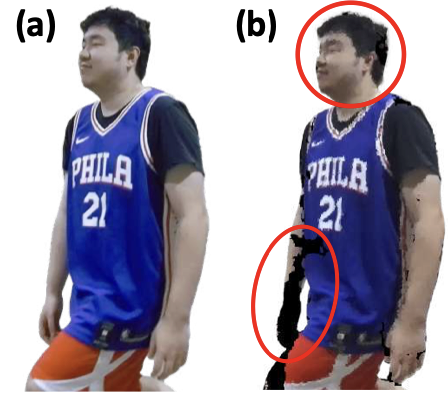

Immersive telepresence has the potential to revolutionize remote communication by offering a highly interactive and engaging user experience. However, state-of-the-art systems exchange large volumes of 3D content to achieve satisfactory visual quality, which consumes substantial Internet bandwidth. To tackle this challenge, we introduce MagicStream, a first-of-its-kind semantic-driven immersive telepresence system that extracts and delivers compact semantic details of the captured 3D representations of users, instead of communicating the raw content bit by bit. To minimize bandwidth consumption while maintaining low end-to-end latency and high visual quality, MagicStream incorporates the following key innovations: (1) efficient extraction of users' skin/cloth color semantics and motion semantics, based on lighting characteristics and body keypoints, respectively; (2) novel, real-time human body reconstruction from motion semantics; and (3) on-the-fly neural rendering of users' immersive representations with color semantics. We implement a prototype of MagicStream and extensively evaluate its performance through both controlled experiments and user trials. Our results show that, compared to existing schemes, MagicStream reduces Internet bandwidth usage by up to 1195× while maintaining good visual quality.
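To make the contrast between semantic and bit-by-bit communication concrete, here is a minimal Python sketch, not MagicStream's implementation, that serializes one frame of "motion semantics" (3D body keypoints) plus a small table of "color semantics" and compares its size with a raw colored point cloud. Everything specific here is a hypothetical assumption: the 17-joint skeleton, the 8 color regions, the 100,000-point cloud, the float16/uint8 encoding, the 30 fps rate, and the helper names `pack_semantics`, `unpack_semantics`, and `mbps`.

```python
import struct

# All sizes below are hypothetical, chosen only to illustrate the idea;
# they are not MagicStream's actual formats or parameters.
NUM_KEYPOINTS = 17               # e.g., a COCO-style 17-joint skeleton
NUM_COLOR_REGIONS = 8            # hypothetical skin/cloth regions, one RGB each
RAW_POINTS_PER_FRAME = 100_000   # hypothetical captured point cloud
RAW_BYTES_PER_POINT = 15         # xyz as 3 x float32 (12 B) + RGB (3 B)


def pack_semantics(keypoints, colors):
    """Pack (x, y, z) keypoints as float16 and region colors as uint8 RGB."""
    coords = [c for point in keypoints for c in point]
    rgb = [c for color in colors for c in color]
    # '<' = little-endian, no padding; 'e' = float16, 'B' = unsigned byte
    return struct.pack(f"<{len(coords)}e{len(rgb)}B", *coords, *rgb)


def unpack_semantics(payload, num_keypoints, num_regions):
    """Inverse of pack_semantics: recover keypoint and color tuples."""
    fmt = f"<{num_keypoints * 3}e{num_regions * 3}B"
    values = struct.unpack(fmt, payload)
    coords, rgb = values[: num_keypoints * 3], values[num_keypoints * 3:]
    keypoints = [tuple(coords[i:i + 3]) for i in range(0, len(coords), 3)]
    colors = [tuple(rgb[i:i + 3]) for i in range(0, len(rgb), 3)]
    return keypoints, colors


def mbps(bytes_per_frame, fps=30):
    """Bit rate in megabits per second for a given per-frame payload."""
    return bytes_per_frame * 8 * fps / 1e6


if __name__ == "__main__":
    keypoints = [(0.25 * i, 0.5, 1.0) for i in range(NUM_KEYPOINTS)]
    colors = [(200, 160, 140)] * NUM_COLOR_REGIONS
    payload = pack_semantics(keypoints, colors)
    raw_bytes = RAW_POINTS_PER_FRAME * RAW_BYTES_PER_POINT
    print(len(payload), "bytes per frame of semantics")  # 126 bytes
    print(raw_bytes, "bytes per frame of raw points")    # 1500000 bytes
    print(f"{mbps(len(payload)):.3f} Mbps vs {mbps(raw_bytes):.1f} Mbps")
```

The ratio produced by these toy numbers should not be compared with the 1195× figure above: real baselines compress their 3D content, and the sketch ignores the reconstruction and neural rendering that MagicStream needs on the receiving side to turn such compact semantics back into a visually faithful user.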

@inproceedings{phan2024fast,
  title={Fast and Interpretable Face Identification for Out-Of-Distribution Data Using Vision Transformers},
  author={Phan, Hai and Le, Cindy X and Le, Vu and He, Yihui and Nguyen, Anh and others},
  booktitle={Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision},
  pages={6301--6311},
  year={2024}
}